What You Need To Know About Meta's Llama 2 Model (Deepgram)

Llama 2 Community License Agreement: "Agreement" means the terms and conditions for use, reproduction, distribution and … Llama 2 is being released with a very permissive community license and is available for commercial use. The code, pretrained models, and fine-tuned models are all being … Llama 2 is also available under a permissive commercial license, whereas Llama 1 was limited to non-commercial use. Llama 2 can process longer prompts than Llama 1 (a 4,096-token context window versus 2,048 tokens) and is … With Microsoft Azure, you can access Llama 2 in one of two ways: either download the Llama 2 model and deploy it on a virtual machine, or use the Azure Model Catalog. A custom commercial license is available. Where to send questions or comments about the model: …

This notebook provides a sample workflow for fine-tuning the Llama 2 7B-parameter base model for extractive question answering. Merge the adapter back into the pretrained model: update the adapter path in `merge_peft_adapters.py` and run the script to merge the PEFT adapters back into … This Jupyter notebook walks you through fine-tuning a Llama 2 model on the text summarization task using the … A reward-model training run (`--stage rm`) is launched with a command such as `CUDA_VISIBLE_DEVICES=0 python src/train_bash.py --stage rm --model_name_or_path path_to_llama_model --do_train …`.
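
The adapter merge folds the low-rank update learned during fine-tuning into the frozen base weights, so inference no longer needs a separate adapter. The sketch below shows that arithmetic for a single linear layer. It assumes the standard LoRA parameterization W' = W + (alpha / r) · B·A. It is not the contents of `merge_peft_adapters.py`, which are not shown here, and the helper names `merge_lora` and `forward_with_adapter` are hypothetical. In the PEFT library itself, merging is usually done by calling `merge_and_unload()` on a loaded `PeftModel`.

```python
"""Minimal sketch: merging a LoRA adapter into a base weight matrix.

Assumes the standard LoRA update W' = W + (alpha / r) * B @ A, where
W has shape (d_out, d_in), B has shape (d_out, r) and A has shape (r, d_in).
Plain Python lists keep the arithmetic easy to follow.
"""

Matrix = list[list[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain triple-loop matrix product x @ y."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def add(x: Matrix, y: Matrix, scale: float = 1.0) -> Matrix:
    """Elementwise x + scale * y."""
    return [[a + scale * b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


def merge_lora(w: Matrix, a: Matrix, b: Matrix, alpha: float, r: int) -> Matrix:
    """Hypothetical helper: fold the scaled low-rank update B @ A into W."""
    return add(w, matmul(b, a), scale=alpha / r)


def forward_with_adapter(w: Matrix, a: Matrix, b: Matrix,
                         alpha: float, r: int, x: Matrix) -> Matrix:
    """Hypothetical helper: unmerged pass W x + (alpha / r) * B (A x)."""
    return add(matmul(w, x), matmul(b, matmul(a, x)), scale=alpha / r)


if __name__ == "__main__":
    # Toy layer: d_out = 2, d_in = 3, rank r = 1 (values are arbitrary).
    W = [[1.0, 0.0, 2.0],
         [0.0, 1.0, -1.0]]
    A = [[0.5, -1.0, 0.0]]      # shape (r, d_in)
    B = [[2.0], [1.0]]          # shape (d_out, r)
    alpha, r = 2.0, 1
    x = [[1.0], [2.0], [3.0]]   # input as a column vector

    merged = merge_lora(W, A, B, alpha, r)
    print(matmul(merged, x))                          # [[1.0], [-4.0]]
    print(forward_with_adapter(W, A, B, alpha, r, x))  # [[1.0], [-4.0]]
```

Both calls print the same result. This is the property that makes merging safe: the merged layer computes exactly what the base layer plus the adapter computed, but with a single matrix multiply.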

How To Get Access To Llama 2 Via Hugging Face, By Yibin Ng (Medium)

"Llama 2 is here - get it on Hugging Face": a blog post about Llama 2 and how to use it with Transformers and PEFT. "LLaMA 2 - Every Resource you need": a compilation of relevant resources to … Llama 2 is a family of state-of-the-art open-access large language models released by Meta, and Hugging Face is fully supporting the launch with comprehensive integration. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 70B pretrained model. To create a new AutoTrain Space: (1.1) go to huggingface.co/spaces and select Create new Space; (1.2) give your Space a name and select a preferred usage …
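
Because the family spans 7B to 70B parameters, a rough sense of the weight memory each size needs helps when choosing a variant. The sketch below multiplies the nominal parameter count by the bytes per parameter at a few common precisions. It counts weights only, with no activations, KV cache, optimizer state, or framework overhead. It also uses the rounded 7B/13B/70B figures rather than exact counts, so treat the results as lower-bound estimates. `weight_memory_gb` is a hypothetical helper name.

```python
"""Back-of-the-envelope weight memory for the Llama 2 sizes.

Assumption: memory ~= parameter count * bytes per parameter. Only the
weights are counted; activations, the KV cache, optimizer state and
framework overhead are ignored, and the nominal (rounded) sizes are used.
"""

# Nominal parameter counts of the three Llama 2 variants.
PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Return weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for size, n in PARAMS.items():
        row = ", ".join(
            f"{prec}: {weight_memory_gb(n, b):.1f} GB"
            for prec, b in BYTES_PER_PARAM.items()
        )
        print(f"{size:>4} -> {row}")
```

At fp16 the 70B weights alone come to roughly 140 GB, more than a single 80 GB GPU holds. At 4-bit the same weights need roughly 35 GB, which is one reason 4-bit approaches such as QLoRA are attractive for the larger variants.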

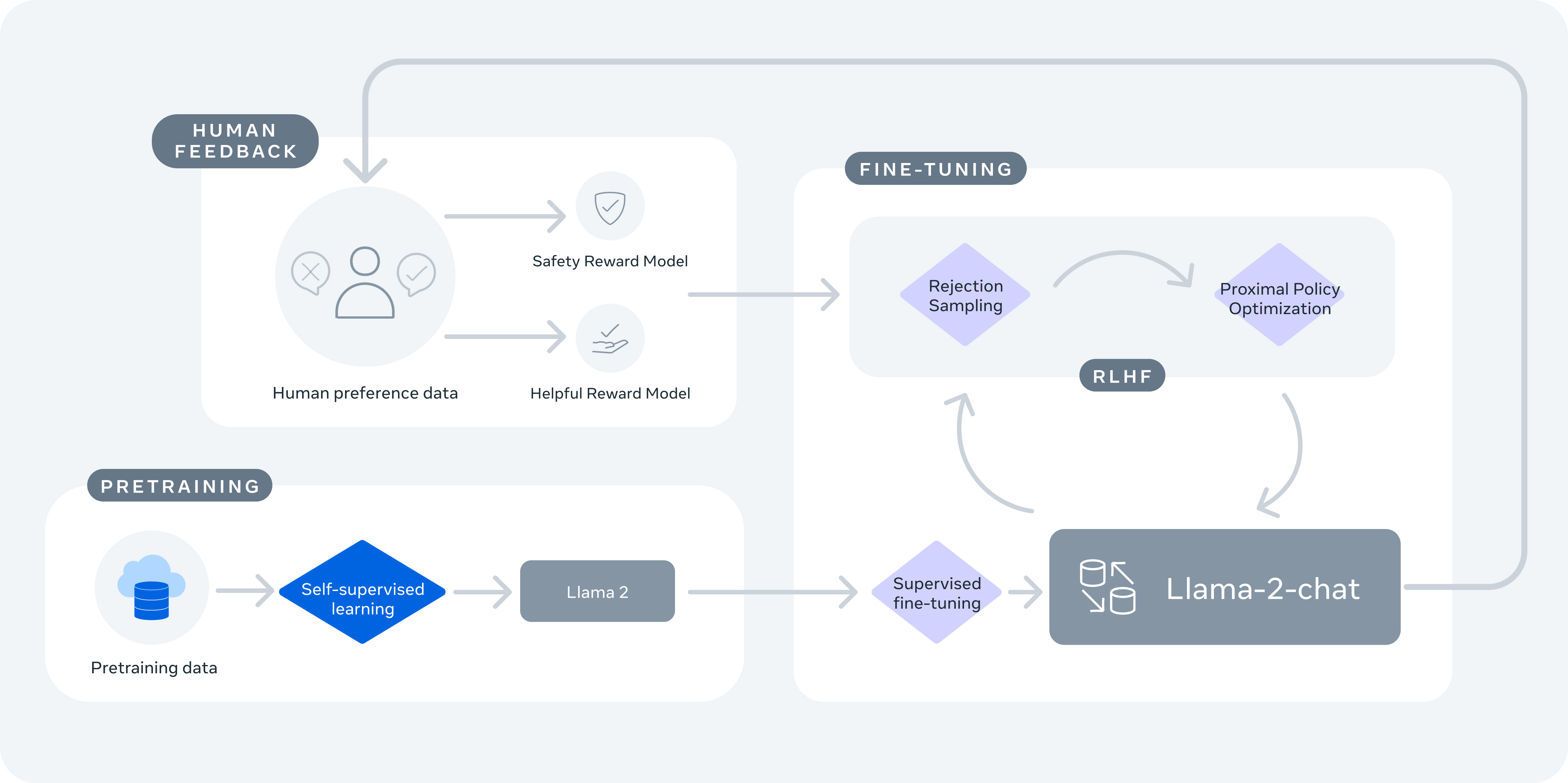

If you want to use more tokens than the model's context window supports, you will need to fine-tune the model so that it handles longer sequences. LoRA, which stands for Low-Rank Adaptation of Large Language Models, operates on a … Use the latest NeMo Framework Training container; this playbook has been tested using the … "Fine-tune LLaMA 2 (7-70B) on Amazon SageMaker": a complete guide from setup to QLoRA fine-tuning and deployment. Single GPU setup: on machines equipped with multiple GPUs, … Fine-tuning is a subset, or specific form, of transfer learning. In fine-tuning, the weights of the entire model … Here we focus on fine-tuning the 7-billion-parameter variant of LLaMA 2 (the variants are 7B, 13B, 70B, and the …).
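
To make the contrast between full fine-tuning and LoRA concrete, consider a single weight matrix. Full fine-tuning updates the whole d_out × d_in matrix. LoRA instead trains two small factors, B (d_out × r) and A (r × d_in), so the trainable count per adapted matrix drops from d_out·d_in to r·(d_out + d_in). The sketch below computes this for the Llama 2 7B shapes (hidden size 4096, 32 decoder layers). The rank r = 8 and the choice to adapt only the query and value projections are illustrative assumptions, not settings taken from any of the guides above.

```python
"""Trainable-parameter count for LoRA versus full fine-tuning.

Assumptions (illustrative only): rank r = 8, adapters on the query and
value projections only, and Llama 2 7B shapes (hidden size 4096,
32 decoder layers, square 4096 x 4096 query/value projection matrices).
"""


def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when the whole weight matrix is fine-tuned."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Parameters in the LoRA factors B (d_out x r) and A (r x d_in)."""
    return r * (d_out + d_in)


if __name__ == "__main__":
    hidden, n_layers, rank = 4096, 32, 8
    adapted_per_layer = 2  # query and value projections (assumption)

    per_matrix_full = full_params(hidden, hidden)
    per_matrix_lora = lora_params(hidden, hidden, rank)
    total_lora = per_matrix_lora * adapted_per_layer * n_layers

    print(f"full matrix:  {per_matrix_full:,} params")           # 16,777,216
    print(f"LoRA factors: {per_matrix_lora:,} params")           # 65,536
    print(f"LoRA total:   {total_lora:,} params")                # 4,194,304
    print(f"reduction:    {per_matrix_full // per_matrix_lora}x")  # 256x
```

The trainable count grows linearly with r and with the number of adapted matrices, so these are the main knobs for trading adapter capacity against memory.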
